Should the future of intelligent machines be humane or humanising?

Public Launch of the Humanising Machine Intelligence Grand Challenge: Should the Future of Intelligent Machines be Humane or Humanising?

Presented by Professor Shannon Vallor, Regis and Dianne McKenna Professor of Philosophy at Santa Clara University in Silicon Valley, AI Ethicist and Visiting Researcher at Google.

In the coming decades, the spread of commercially viable artificial intelligence is projected to transform virtually every sociotechnical system, from finance and transportation to healthcare and warfare. Less often discussed is the growing impact of AI on human practices of self-cultivation, those critical to the development of intellectual and moral virtues. The art of moral self-cultivation is as old as human history, and is one of the few truly unique capacities of our species. Today this humane art has largely receded from the modern mind, with increasingly devastating consequences at local and planetary scales. Reclaiming it may be essential to averting catastrophe for our species, and many others. How will AI impact this endangered art? What uses of AI risk impeding or denaturing our practices of moral cultivation? What uses of AI could amplify and sustain our moral intelligence? Which is the better goal for ethical AI: machines that are humane, or machines that are humanising?

Professor Shannon Vallor researches the ethics of emerging technologies, especially AI and robotics. She is the author of the book Technology and the Virtues: A Philosophical Guide to a Future Worth Wanting from Oxford University Press (2016), as well as the creator of Ethics in Tech Practice, a popular suite of online materials used by leading tech companies for in-house ethics training. Her many awards include the 2015 World Technology Award in Ethics and multiple teaching honours. She serves on the Board of the non-profit Foundation for Responsible Robotics, is a visiting researcher and AI Ethicist at Google, and consults with other leading AI companies on AI ethics. She regularly advises tech media, legislators, policymakers, investors, executives, and design teams on ethical and responsible innovation.

The Humanising Machine Intelligence Grand Challenge

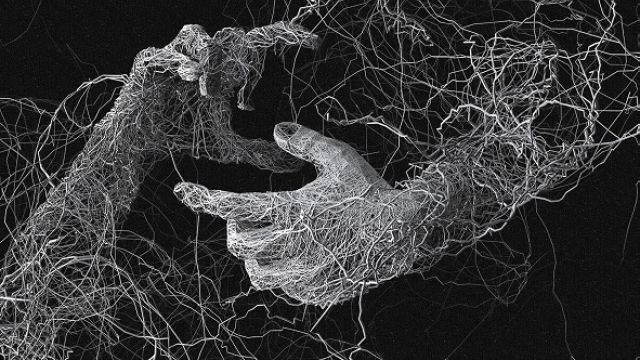

Every new technology bears its designers’ stamp. For machine intelligence, our values are etched deep in the code. Machine intelligence sees the world through the data that we provide and curate. Its choices reflect our priorities. Its unintended consequences voice our indifference. It cannot be morally neutral. We have a choice: try to design moral machine intelligence, which sees the world fairly and chooses justly; or else build the next industrial revolution on immoral machines.

To design moral machine intelligence, we must understand how our perspectives reflect power and prejudice. We must understand our priorities, and how to represent them in terms a machine can act on. And we must break new ground in machine learning and AI research.

The HMI project unites some of The Australian National University’s world-leading researchers in the social sciences, philosophy, and computer science around these shared goals. It is an ANU Grand Challenge.

Location

Manning Clark Hall, 153 University Avenue, 2600 Canberra

Speaker

- Professor Shannon Vallor